Chapter 1: Introduction

Background and Context

Technology has also played an expensive role in transforming the financial markets over the past few years especially in as far as automatic trading is involved. Among the largest innovations in this field are the use of the application of the computer based application as the activity where the laws and mathematical formula regulate the trading of a computer line, and according to which the application made by the computer performs the trade (Ramamoorthi, 2021). The nature of these systems is devoted to more rapid execution and larger volume by humans beings unable to adequately conduct more of the execution, raising the precision and economy, on trading approaches and are consequently more efficient.

The traditional market rule-based systems have been on the end of their limits, as the amount of market data into the market has increased exponentially, such that as more increases the amount increases to topple the scale. Contemporary financial market place is non-linear where the dynamics involved are not normally well accommodated in such places. Therefore, the influence of Artificial Intelligence (AI) and Machine Learning (ML) and algorithmic trading has been a highly important phenomenon (Leahy, 2024). These technologies can be increased in terms of the amount of information they can handle and reveal trends behind the scene, building the likelihood estimates based on the tendencies of the past - which is required in competitive trading processes.

Machine learning has been the prospective field of AI and it has assured it in this prospect. It is characterized by the fact that, unlike the conventional statistical models, the ML algorithms are probability learners, which also are exposed to continuous reach over time in their effort to learn on the job (Patil, 2023). Recently, the approaches to supervised and unsupervised learning, reinforcement learning, and deep learning have been whittled down as a financial time series classification facilitator, signal generation, portfolio optimization, and risk management. Since the paradigm of LSTM networks has been employed in dealing with the sequential aspect of the market data, and now the reinforcement learning has the power to generate the adaptive strategies that can be employed in optimizing the trade decision based on the rewards that have been learned.

This has already been considered in big financial houses in their trading nexus. Interestingly, the rebalancing of the portfolio at Goldman Sachs through the dynamic concept is also created through the aspect of reinforcement learning, however, JPMorgan Chase uses the concept of deep learning to improve their risk identification and investment placement. These efficient applications that have highlighted an achievable interruption on the basis of AI enhanced algorithmic trade.

Even though it has been developed, the establishment of consistent returns and lucrative trading schemes is a complex and unachieved issue. The markets are haphazard and subjected to the hundreds and thousands of impacts which are not predictable. Thus, the capability to design artificial intelligence models whose overextension among different regimes of the market can be effortlessly handled, such artificial intelligence modelling designs may be comprehended, and their execution in challenging conditions is feasible, is a continuous academic and business research topic.

Deadlines close? Our Assignment Help London delivers quality, on time—every time

Research Problem and Rationale

The greater part of this cross has been encountered in the recurrent and fixed-rule based algorithms or highly simpler statistical methods in algorithmic trading occupies the space of financial markets in recent times. These systems find it hard to be connected to non-linear and ever moving world of the financial market. This renders their performance to be generally unstable in terms of providing stability in terms of profitativity within the market environment as regards to the up and down streaming. Moreover, most of these systems have been vulnerable to other problems wherein the strategies have turned out to be spectacular in history only to crumble when they are applied in the real market conditions.

The Machine Learning (ML) and the Artificial Intelligence (AI) can be employed to address most of these restrictions (Dou et al., 2023). Exposure to continuous large amounts of market information can enable AI/ML-driven system reading between the lines where the scientific solution manages to adjust to the gradual evolving trends and can present probabilistic forecasts, which are not enabled by the standard methodologies. Nevertheless, with nearly the same rate at which such a process is taking over the financial industry, the existing academic ground comes with glaring lapses in the understanding of how to establish strong, understandable, and broad AI trading structures.

One of the biggest is the lack of the transparency of the strategies of AI arrangement. Trading decision making is not such a concept that matters much, particularly regarding its working methods, the majority of black-box models, in their turn, multi-level deep learning models (Monteiro, 2024). That presents a difficulty in legitimizing models, behavior, which is contrary to wrongful and unlawful interests, in every situation in the market. Furthermore, even the computational and data demands of advanced ML methods can sometimes be prohibitive even to smaller companies, or retail investors, so they do not apply widely.

These form the motivation behind this project. It aims to critically investigate and develop AI/ML applications to algorithmic trading to appear in response to the necessity of systematic and evidence-based assessment of its performance. Through the creation of a prototype system and the active backtesting on historical data, the proposed research will help to deliver information on the feasibility and constraints of the implementation of AI-powered trading policies.

Aim and Research Questions

The key objective of the project is to design, test, and assess an artificial intelligence-based algorithmic trade mechanism to use machine learning methodology to base trading selections on data. The research aims to explore the possibilities, impact of the implementation of chosen AI/ML models to historical financial market data to the organized trading structure.

Primary Research Question

To what extent can machine learning models themselves make algorithmic trading strategies that will be reliable in the face of historical financial data?

Secondary Research Questions

- What AI/ML models are best applied to forecast financial time series and make actionable trading signals?

- Which customer performance measures effectively define the profitability, risks and strength of an AI-driven trading strategy?

- What can different models do in different market regimes (e.g. bullish, bearish, sideways)?

- What are the legal, ethical and technical consequences of applying AI in financial decision-making?

- What can be done to prevent the threat of overfitting and model instability arising during the development of an AI trading system?

Such research questions form the basis of the investigation discriminating the choice of methods, model building and performance measurement. They also contextualize the research in the wider context of entrepreneurial AI adoption discourse in finances.

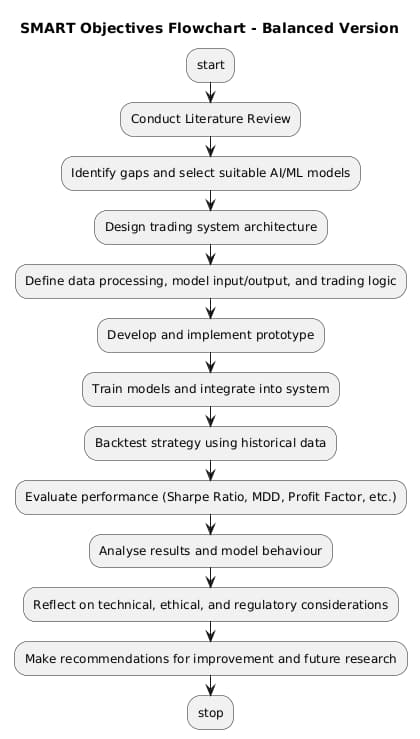

Objectives

- In order to respond to the aim and research questions stated in the previous section, the current project sets the following specific and measurable objectives.

- In order to have a truly thorough literature review of the application of artificial intelligence and machine learning to algorithmic trading, it is necessary to distinguish the already properly developed techniques and methods as well as the new ones.

- To evaluate/screen suitable algorithms of AI/ML (e.g. supervised learning algorithms such as Random Forest or sequential algorithms such LSTM) to the extent that they are theoretically bypassed in financial time series forecasting and trading signals generation.

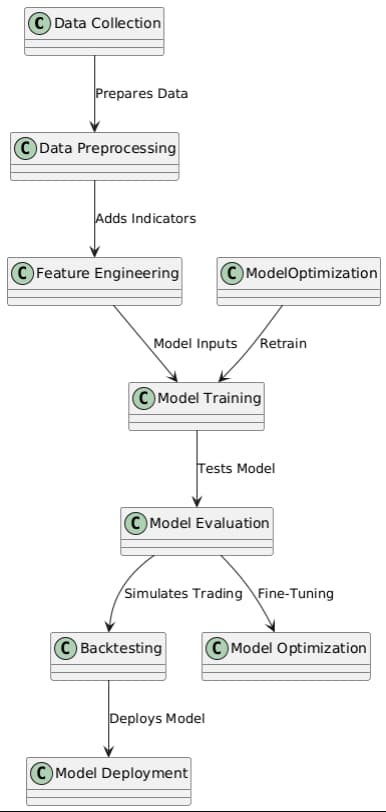

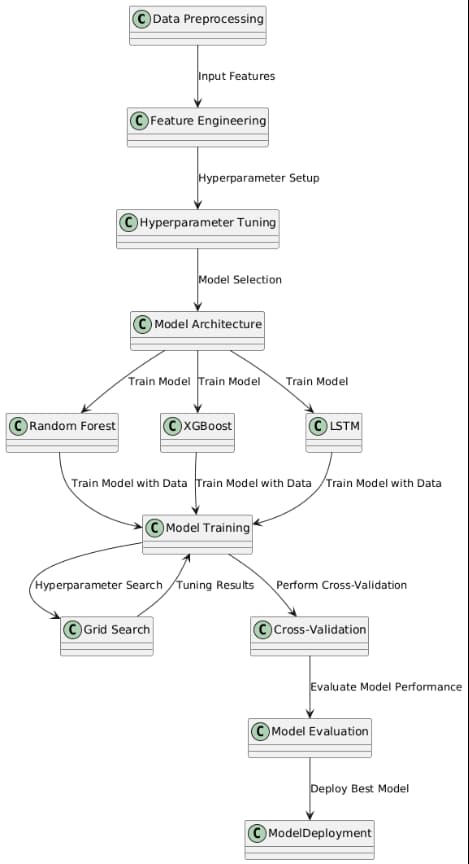

- In order to create a modular trading framework, it will be needed to incorporate market data preprocessing, signal generating and order execution logic into structured architecture of the system.

Figure 1: SMART Objectives

- In order to have a workable prototype, it is necessary to make use of actual historical information to model trades, experiment with the chosen models, and procure output in the form of performance.

- To analyze the trading strategy and its performance, Risk, Point of Strength, and Stability with key financial rest, Sharpe Ratio, Maximum Drawdown, Profit Factor, and win rate analysis.

- To think critically about findings, analyze ethical, regulatory and technical problems, and recommend future research or refinements in the trading model.

Scope of the Project

The project is concerned with the creation and testing of an AI-based algorithmic trading solution with the historical financial market data, mostly the equity market, publicly available and as primary data sources. The scope is specified thoroughly to guarantee a focused research project meeting both academic and technical demands of the Master level research project.

Inclusions:

- The paper will look to adopt selected machine learning models to make price movement predictions or trade signals/signals including: Random Forest, Long Short-Term Memory (LSTM) networks, and Reinforcement Learning(Paleti, 2025).

- It will be developed with a Python development environment that includes open-source software such as pandas, scikit-learn, TensorFlow, and Backtrader.

- The system will be implemented to deploy in backtesting mode which the system will simulate to trade its performance to test profitability and withstandability on different market conditions across historical data.

- The using performance measures such as Sharpe Ratio, Maximum Drawdown and Profit Factor will be used to evaluate performance.

Exclusions:

- There will be no live trading and utilization of real capital undertaken in the project.

- HFT policies that demand ultra-low latency infrastructure are outside of the scope of the project(Devi et al., 2025).

- Issues concerning the jurisdiction of multi asset portfolio in the course shall also be provided in brief though in somewhat clear and detailed terms with description, like one simple asset or small set.

- Through the project the balance of work was defined based on the complexity, viability and academic rigour of work that this was accurate in terms of the scoping of the work that was presented.

Significance of the Study

It turned out that the use of AI towards algorithmic trading has become the one that has proved to be a viable opportunity, both scholarly and commercial (Chojecki, 2020). The paper adds to that evolving image in that it gives the slight, yet piercing, analysis of how AI/ML models can be converted into the real truly world concerning the developing and testing of algorithmic trade approaches based on the background of past data.

The academic contribution in this work is associated with the field of increasing literature in respect to computer science and quantitative finance and machine learning. It combines experience to technical applications and handles an extremely elitist problem that earns a more sensible and perceptible AI-mediated trade schemes. The paper assists in closing this void in the current AI procedures to practical application in finance curriculums because it summarized various models and methods of assessment.

Practically, in the real world, the results can be informative to designers and programmers, and also the interests of the retail trades that may be curious about trading using AI (Perumal et al., 2025). The balanced interest in the retail algorithmic trading systems and access to data is high; then, more is requested to evolve AI-based strategies in its feasible, adjustable version, and perceive how it will be tested.

It also has a social and ethical impact because the study covers challenges relating to the aspect of algorithmic obscurity, equity, and stability in the markets. Overfitting, model bias, regulatory expectations, and many other issues directly meet the consideration in the project as they are fundamental in promoting responsible AI implementation in financial settings.

Lastly, the fact that a working prototype based on systematic testing has been developed can serve as a valuable case study that can be used to future research or development in AI-based trading systems (AlJadaan et al., 2025). Such a proportion of theoretical and practical approach promotes the topicality of the study in various spheres.

Structure of the Dissertation

The dissertation is structured in the form of six chapters, with each new chapter leading up to the design, implementation and evaluation of an AI-powered algorithmic trading system.

Chapter 1: Introduction

Introduces the research context, provides the problem statement, the aim, objectives and research questions, provides the scope of the project, and summarises the format of the report.

Chapter 2: Literature Review

Critically analyses the prior literature on algorithmic trading, artificial intelligence, and machine learning methods and establishes gaps in the literature and provides this information to direct the model selection process.

Chapter 3: Methodology

Describes the architecture of trading system, selection criteria used to model, data acquisition and preprocessing phases, and backtesting sett-up.

Chapter 4: Implementation and Model Development

Tells about how the trading prototype was developed, how it was trained on the algorithms, how features were engineered, how components were integrated, and the system was run.

Chapter 5: Evaluation and Results

Displays the outcome of the backtesting procedure, assesses the performance of the strategy in terms of financial performance, and examines its strength.

Chapter 6: Conclusion and Recommendations

Generalises important findings, evaluates the limitations of the projects and provides recommendation of how future studies and model refinements are possible.

Chapter 2: Literature Review

Introduction

The purpose of this review is to look closely at how AI and ML apply to algorithmic trading by supporting smarter trading choices and improved trading methods. The goal of the review is to show how trading softwares are being used and evaluate their performance with the help of peer-reviewed research. The study also points out missing information in earlier studies that the dissertation will try to resolve, specifically by turning to advanced AI/ML models to boost profitability in trading.

As financial markets continue to get increasingly complex, traders start to employ more technology such as AI and ML. Because of these technologies, traders are able to predict markets better and make more decisions that bring them gains. This type of algorithms significantly benefits the industry because they allow computers to make decisions on their own and stay in line with the latest market changes, outperforming previous methods. While AI has a great potential, some obstacles still exist, for instance, ensuring models do not become unstable, facing the risk of overfitting, and checking the data used for training (Alibašić, 2023).

It reviews algorithmic trading as well as the types of AI/ML models used for it, along with testing and measuring their performance in the financial sector (Bao et al., 2022; Sadaf et al., 2021). The investigation will uncover data about AI-driven trading now and will highlight the prospects of applying AI to trade in the future.

Overview of Algorithmic Trading

Using AT, automatic algorithms are made to control buying and selling orders in markets, activated by set rules and settings (Massei, 2024). Using speed and precision is the basic goal of algorithmic trading to get the best results in the market. Alibašić (2023) suggests that because of algorithmic trading, financial markets have been transformed and traders can use minute price changes and existing arbitrage opportunities. Nevertheless, as it is noted by Bao et al. (2022), although AT makes trading better, it results in the increase in turmoil in the market due to the possibility of severe consequences in case of failure that fuel possible crashes. Moreover, Sadaf et al. (2021) report that MiFID II and MAR can be used to address issues related to illegal trading and values underlying algorithmic trading. Although there are positive assumptions about algorithmic strategies, their application in markets has led to people raising concerns whether they are fair, transparent, and accountable (Mane et al., 2025). All of this implies that despite the reduction of expenditures and facilitation of the development of liquid markets, algorithmic trading subjects people to exploitation and disruption of trading and thus the necessity to introduce specific regulations (Chowdhury et al., 2024).

Introduction to AI and Machine Learning in Algorithmic Trading

AI and ML Basics

AI and ML are very important in algorithmic trading because they help trading algorithms make better decisions. As the scientists explain, AI and ML go beyond what humans can notice by examining vast market data and can guide the forming of more advanced trading methods. Some of the AI strategies included in trading algorithms are supervised learning, unsupervised learning, and reinforcement learning. They are meant to forecast the market, maximize the tricks of trade and react to changes immediately. As it is stated by Shetty et al. (2022), the process of supervised learning analyzes labelled data to predict the next stock price by studying the trends, whereas unsupervised learning, particularly in clustering and spotting anomalies among data, is based on the identification of patterns and abnormal outcomes in the data (Usman Ahmad Usmani et al., 2022). Reinforcement learning on the other hand allows the algorithm to become better with time, by using their previous data and testing various strategies in a systematic way.

According to Chowdhury et al. (2024), the use of AI and ML helps model algorithmic trading much more and thereby enhance the performance of every activity in the market. However, they also insist that excessive reliance on AI in high frequency trading can lead to the instability of the market, in case this is not taken care of (Sukma & Namahoot, 2024). AI-powered filtering through large amounts of data has become an advantage to companies, although the technology can also create certain problems of fairness and transparency in the market (Morales and Escalante, 2022). Due to the fact that AI and ML provide algorithmic trading with much, the risks involved in managing the boundaries are significant.

Relevance of AI/ML in Algorithmic Trading

AI and ML simplify the process of traders to select superior and effective trading strategies. Such technologies may identify microscopic details of a large amount of market reports as suggested in Vasantha et al. (2022), which may be used to predict the price and trends of stocks. As Chan (2009) mentions, the predictive models that are developed with the help of AI and ML provide companies with greater opportunities to lead in the market. Nevertheless, the application of AI and ML to trading, in general, leaves some people worried about the absence of balance in the market and the effect that AI can have when it comes to market manipulation (Chowdhury et al., 2024). Although AI systems are much quicker than human beings, Mane et al. (2025) elaborate that they have a chance to take advantage of the issues of the markets through means, which are difficult to detect.

As Sadaf et al. (2021) note, the way the AI/ML-based algorithms can transform the market is a relatively large issue that concerns many. Because they react to new market trends swiftly, these algorithms may make the market surge or decline without any notice. Palaniappan et al. (2024) state that relying on AI and ML to find quick chances in the market can increase the possibility of issues that disrupt the stability of the market. Because algorithmic trading is rapidly changing due to AI and ML, there should be tough guidelines addressing fairness, transparency, and other ethical issues in these strategies (Sukma & Namahoot, 2024).

Prominent AI/ML Techniques in Algorithmic Trading

Many trading strategies are improved in algorithmic trading thanks to the use of AI and ML. Many analysts use supervised learning, during which past market data is reviewed to expect future price movements. Morales and Escalante (2022) that techniques like regression and classification perform exceptionally well when it comes to predicting stock market results using familiar data trends argue it. They need to work with data sets that are clearly labeled in order to improve the program's prediction skills.

Reinforcement learning is also a valuable method of machine learning since it enables the algorithm to learn from its results in the market. Sinha and Lee (2024) indicate that reinforcement learning enables trading systems to adjust to new market circumstances by earning the most rewards as time goes on. Because of its ability to react quickly, this technique is especially important in HFT trading.

Indeed, in algorithmic trading, supervised learning is important for searching for hidden information in significant datasets. Traders can use such algorithms as k-means and hierarchical clustering to cluster similar assets or market elements and help make better and more informed decisions (Shetty et al., 2022). Moreover, deep learning (an AI tool) applies neural networks which include multiple layers as they plot intricate non-linear tendencies on the market. This will contribute to the accuracy of trading forecasts (Mane et al., 2025).

AI/ML solutions are very useful in the direction to be able to make algorithmic trading more accurate, fast, and capable of adapting to new circumstances. But, as pointed out by Palaniappan et al. (2024), their introduction needs proper supervision to avoid creating problems in the market and potential ethical issues.

Key AI/ML Techniques for Trading Strategy Development

Machine learning ensures that algorithmic trading adapt to unexpected market changes and offers a better chance of future success. In trading, many different ML algorithms are applied because each of them has its own strengths. By using these algorithms, traders can rely on data insights, automatically follow trading plans, and enhance how the market works. On the other hand, applying machine learning algorithms brings certain issues, and comments on their strengths and weaknesses are common in the literature.

A large number of traders rely on Supervised Learning Algorithms. Shetty and his researchers state that models including SVM and Random Forests are used to predict changes in asset prices by using records from the market. They rely on labeled past data to help predict possible future changes in the market. According to Chan (2009), the supervised learning methods assist in prediction and detection of changes in the market but require large volumes of correct data training. In case there is any form of incorrect information or lack of neglect in the market data, then it becomes difficult to estimate success in market performance.

The known ML algorithm in trading is the Reinforcement Learning (RL) since it assists the machine to adjust to novel circumstances. According to them, using RL, the algorithm continues to play with the market and adjust its strategy upon trying different things. Due to the continuous improvement of RL, this approach is quite helpful in the field of high-frequency trading (HFT) because an algorithm needs to respond quickly to a shift in the market. Chowdhury et al. (2024) state that RL assists in trading strategies making gains in the long run, however, it also can result in massive risks such as emulating past market trends to excess and responding too intensely to temporary shifts, leading to erratic behavior in some cases.

Some of the algorithms that belong to algorithmic trading are k-means clustering and principal component analysis (PCA). These algorithms are of great help in market segmentation as well as identification of unusual behavior. As noted by Bao et al. (2022), in the markets the hidden patterns can be identified using the unsupervised learning while they cannot be identified using the usual techniques. Such practices may make investors make decisions based on the corresponding data or unplanned variations. Nevertheless, Morales and Escalante (2022) state that it may be challenging to interpret the unsupervised model results, and at that, they may not become common in the trading world, where clarity is paramount.

Deep learning in particular Long Short-Term Memory (LSTM) networks are beneficial in capturing and analysing complex trends that can be evident in time-series data that is relevant to trading. According to Vasantha et al. (2022), LSTM networks were able to store longer patterns in the market data which aids in making accurate stock forecast and identifying the existing trends. Despite all their strengths and benefits, it becomes evident that deep learning requires an extensive processing power and tends to fit the data very tightly, as a result, tremendous effort is required to ensure that they are robust (Palaniappan et al., 2024).

All in all the application of machine learning algorithms can make algorithmic trading faster, flexible and precise although there are also some problems with data quality, and their output is hard to interpret, but overfitting occurs. Sadaf et al. (2021) claimed that these algorithms should be implemented correctly and maintained under control to avoid instability and unfairness in the field of trading.

Case Studies of Successful Implementations

AI use, principally machine learning, has been used in the trading by many financial bodies and demonstrates how it is aiding the market.

Goldman Sachs is one of the prominent leaders in the sphere as it is based on high-speed algorithms of trading with the help of reinforcement learning. According to Sinha and Lee (2024), Goldman Sachs continues to develop its strategies and financial performance by implementing RL in adverse markets.

JPMorgan chase had used deep learning, and in this case, in particular, LSTM networks were trained to analyze stock patterns and optimize investments. According to Mane et al (2025), LSTM can be used to make predictions in JPMorgan, which allowed them to have greater control over the management of risks resulting in an improved positioning of the assets.

The effectiveness of these applications highlights the proficiency when it comes to managing algorithmic trading by demonstrating superior decisions, improved predications, and adjustment to market changes as they happen (Olorunnimbe and Viktor, 2022).

Backtesting and Performance Evaluation in Algorithmic Trading

Importance of Backtesting

Backtesting is of the highest importance to the traders in algorithmic trading as it allows them to have a glimpse of how their strategies have worked in the past over time before they engage in the actual markets. It enables traders to observe potential errors, modify the required settings as well as analyze the risk taken in different stages of the market. Based on Chan (2009), backtesting checks how a trading strategy might behave in many different kinds of stock markets. As a result, the algorithm delivers similar earnings and ensures that risks are managed, making decisions better and increasing the opportunity for success in live trading.

Backtesting Frameworks

Backtesting frameworks create a plan for testing trading methods by reviewing past market information. Based on the Sinha and Lee (2024) study, QuantConnect and Backtrader have many resources that make it easier to implement, test out, and improve trading algorithms. Several asset types are offered on these platforms, for example, equities, forex, and cryptocurrencies, and these systems are good for trying out advanced strategies. A general framework for back testing contains various components, among them, data collecting, strategy running, tracking how it performs, and displaying the results. With these frameworks, traders are able to run simulations using their old algorithms, to see previous outcomes and adjust the parameters to make them work better. Palaniappan et al. (2024) add that the framework used for testing should suit the trader's needs in terms of execution speed, accuracy of data, and availability of computing resources for the test to reflect real-life trading.

Evaluation Metrics

To evaluate trading algorithms, different important metrics are usually checked. Sharpe Ratio shows if an algorithm handles risk while still performing higher than other algorithms. It implies that the algorithm can attain high profits despite all the numerous risks (Sukma & Namahoot, 2024). The next thing to examine is known as Maximum Drawdown (MDD) and indicates the lowest point the algorithm was able to hit in the market due to a difficult situation (Chowdhury et al., 2024). In order to determine the strategy profitability, the gross profit/gross loss ratio is referred to as Profit Factor.

In addition to this, Alpha and Beta demonstrate to us the relationship between the algorithm and the entire market. Alpha shows earning of the algorithm over the benchmark and Beta depicts the reaction of the algorithm to the market events taking place (Sinha and Lee, 2024). Besides that, the glance of the Average Trade Time and Win rate values informs the traders about the extent of success they will realize in their trades and the duration the trades should persist. Once the traders have a collection of the offered metrics, then they can highly study the operations of the algorithm and make superior judgments regarding dangers and courses.

Common Issues in Backtesting

Backtesting has some problems. The strategy is personalised quite often, to past data, which yields poor results when applied to actual trading. As noted by Bao et al. (2022), the overfitting occurs due to the fact that the algorithms are working fine with the known past data but fail to go through the new scenario. Another issue is known as data snooping, in which the outcomes of machine-learning algorithm are affected due to repeated testing on the same data. Moreover, in case of backtesting, one should never utilize any future information that may skew the result. These issues should be paid attention to as the aim at achieving credible and precise backtest results.

From Level 3 to Level 7 — reliable CIPD Assignment Help for guaranteed success.

Legal, Ethical, and Social Implications of Algorithmic Trading

Regulation of Algorithmic Trading

Algorithms trading is managed properly and the financial market remains to operate in a fair and smooth way. According to them both the MiFID II in the European Union and SEC Rule 15c3-5 in the United States were established in an attempt to address the high-frequency trading issue and ensure the market is reliable. Firms are required to exercise risk controls, monitor their trading algorithms and articulate their actions. The firms should also ensure that they save documents of their systems and test it frequently to verify the impact of systems during periods of market disruption as per the regulations. According to Chowdhury et al. (2024), as algorithmic trading evolves with time, regulations are also to be dynamic to address emerging issues of AI and machine learning. Along with the expansion in growth of algorithmic trading, regulators need to make sure that there is innovation and safety and stability of the financial market to the investors.

Ethical Considerations

The vast majority of ethical problems of algorithmic trading are related to market equity and their transparency. Alibašić (2023) claims that algorithms can exploit the vices of the markets and lead to better performance of large corporations with developed algorithms over retail traders. Also, individuals worry that algorithms may give a lot of trouble to the stock exchange without human sanction and participation. Ethical algorithms and regulation should be designed since price manipulation in the market due to some risky financial practices may happen (Palaniappan et al., 2024).

Market Impact

The market significantly depends on the manner in which market algorithms trade. Bao et al. (2022) argue that, in addition to injecting liquidity into the market and enhancing market efficiency, it can also lead to instability in the market, primarily through high frequency trading. How these algorithms function is such that they can lead to the abrupt change in price and the market crash. In addition, noting that algorithms become more widespread in the industry, Sukma and Namahoot (2024) also mention that it can make the market less uniform, as algorithms tend to do the same, which may generate a threat to the market, in general. Consequently, although the advantages of algorithmic trading are numerous, the impact of this system on the stability and fairness of the market must be adequately controlled.

Gaps in the Literature and Future Directions

Unresolved Issues in AI/ML Trading Strategies

Even though AI and ML gain more and more popularity in the trading, there are a lot of points that should be solved. Overfitting has been discovered to be associated with overconnection of models to the past data and lack of capacity to respond to market changes (Chowdhury et al., 2024). Since AI does not always disclose how it arrives at the decisions, quite a few question the topics of whether the processes are done fairly and reasonably, especially in the case when these systems lead to the damaging shifts within the markets (Mane et al., 2025). Most of them do not trust the AI-based decision, as it is difficult to know the underlying reasons behind a trading decision using the deep learning models (Sinha and Lee, 2024). Besides, data may be noisy or informational and data may corrupt your model, according to Palaniappan et al. (2024). In the context of introducing reinforcement learning to the real market, it is complicated to find the proper balance between the decision to take risks and follow the rewards of the market since it can lead to the implementation of less than ideal strategies in the case of market surprises (Morales and Escalante, 2022).

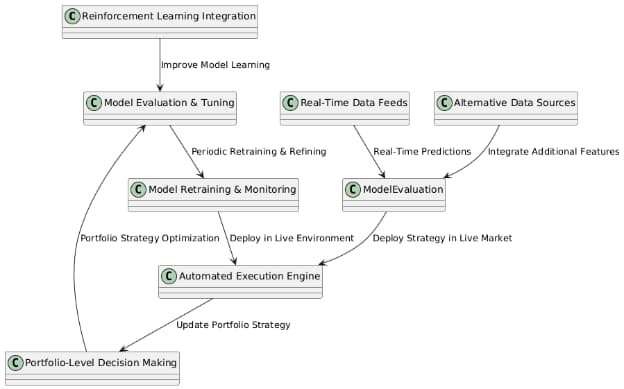

Areas for Future Research

Future researchers should concentrate on dealing with the issues AI/ML models have in algorithmic trading. Palaniappan et al. (2024) agree that one crucial point for development is to make sure machine learning models work the same across all kinds of market conditions. Scientists to make AI-based strategies more possible to interpret and therefore more accountable (Chowdhury et al., 2024), should research explainable AI (XAI). It is also possible that using combination methods like deep learning and reinforcement learning will strengthen trading strategies needed in complex trading situations (Mane et al., 2025).

Conclusion

In summary, AI and ML have improved algorithmic trading because they make decisions based on data and prevent uncertainty and random actions. Many changes in predictive accuracy and the workings of the market have been made possible by using algorithms in finance, such as supervised learning, reinforcement learning, and deep learning. Yet, because of these new techniques, concerns about overfitting, not seeing all the steps, and the chance of market rigging have not been fixed. For these reasons, it is obvious that strict guidelines and ethical standards are needed to ensure trading is fair and honest. It would be helpful to develop models that adapt easily, look into ‘explainable AI' to make things clearer, and mix several AI techniques to make investing methods tougher. Since AI and ML are advancing fast, people involved in finance should work together to address the advantages and disadvantages of using them. As a result, algorithmic trading will not put the market's stability at risk.

Chapter 3: Methodology

Introduction

The chapter on methodology explains the systematic process that is used to develop, train, evaluate and back test machine learning models to predict stocks in the market. This paper combines the concepts of technical analysis, predictive modeling, and algorithmic trading to compare the higher efficacy of the various machine learning-based algorithms in terms of financial decision-making.

Considering the sophistication and pragmatics of financial markets, it is important to make a proper choice of methodological framework in order to come to meaningful and effective conclusions. Its seed idea will involve developing a predictive pipeline which synthesizes past trends according to the information of stock prices in order to predict the future information having the help of advanced machine learning procedures.

It then organizes the chapter in a way that it absorbs the entire steps of a modeling process such as data collection and data cleanup, model training, proper validation as well as model evaluation. In addition, it recaps about the application of the technical pointers, feature engineering methods as well as some account as to why particular classification algorithms are chosen as the Bits random forest, the XGBoost and the LSTM (Devi, Rath and Linh, 2020). There are other two, namely walk-forward optimization and market regime analysis which are included to investigate strength of the strategies in fluctuating market circumstances.

This chapter gives the groundwork to credible repeatability in experimentation, results that could be interpreted and outcomes that are applicable in the real world in algorithmic trading by maintaining a regulated and formal discipline of the research.

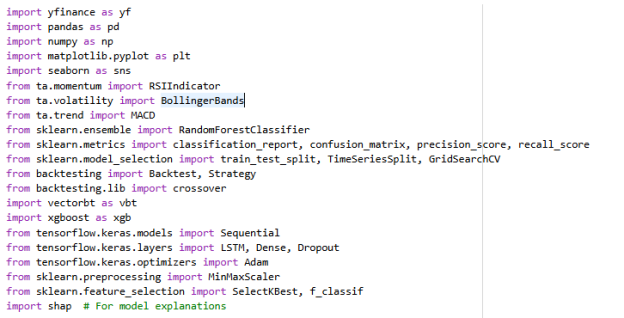

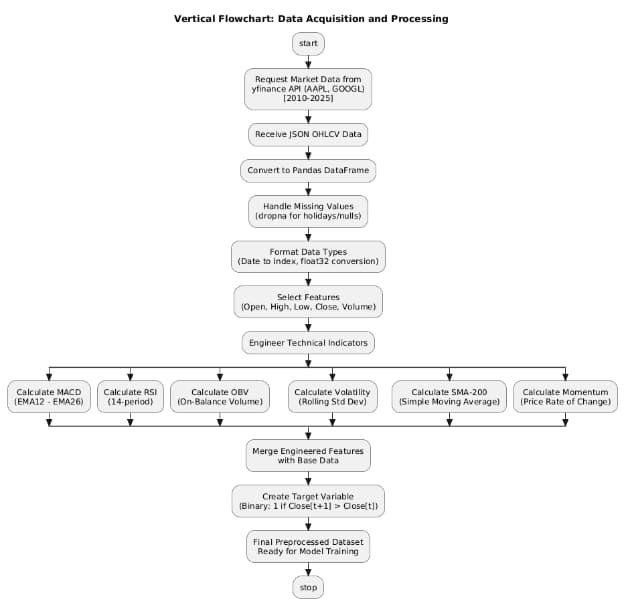

Data Collection

Historical data on stock market in the present study was gathered to be used in training and testing machine learning. The source of the data was the yfinance API which offers a programmatic access to financial information at Yahoo Finance (Gunawan and Ikrimach, 2024). It entails daily price data like open strong volatility low close, adjusted close and trading volume of various tickers, one namely, Apple Inc. (AAPL) and Google (GOOGL).

Figure 3: Data Acquisition and prcessing flowchart

Timeframe and Frequency

The chosen timeframe is equal to January 2010 to September 2025 since it covers a wide range of data regarding different market cycles including the bull and bear markets (Zapata et al., 2023). The frequency of the data will be daily, averagely enough granular but computational effectiveness. Short and medium-term trading strategies are especially appropriate in a case of daily data that enables one to capture price movements and volatility without the intraday jumble.

Ticker Selection

The tickers picked to be analyzed are those that are indicative of highly liquid and actively trading stocks of the market in the United States. The selection of AAPL and GOOGL was considering performance history, data availability as well as the popularity of the stocks in holdings of institutions (Sharma, Sharma and Hariharan, 2024). These stocks offer a good test market to check testing of the model and the strategy performance.

Data Format and Structure

The crude financial information retrieved via yfinance library has been arranged systematically in Pandas DataFrames and each stock ticker has been stored in a different dataset to enhance ease and modularity. All DataFrames had common columns like Date (created as the index), Open, High, Low, Close, and volume which are daily trading variables. All DataFrames were also aggregated into a Python dictionary to more easily deal with the handling of many assets, and simplified access and iteration later in the feature engineering, exploratory analysis and model development phases (Johnson, 2025). This hierarchical method was used to make it scalable and consistent when dealing with data sets that were very large.

Data Preprocessing

A number of preprocessing steps were conducted on the raw financial data before the process of feeding them into machine learning models to verify the quality, consistency, and predictive modeling suitability of the dataset.

Handling Missing Values

- The dataset in place in financial markets can possess missing/ NULL values because of holidays in the market, a glitch in the data, or a corporate event.

- The dropna function was used to drop the rows with the missing values to ensure the integrity of data.

- Given that the prices of stocks are nonstop and numerous, the loss of several missed data lines did not weigh much on the sequence.

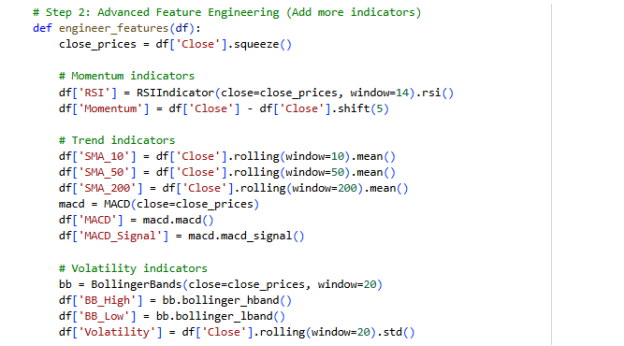

Feature Selection

The key indicators of the price and trading activity, Open, High, Low, Close, and Volume columns, were chosen to be kept and used in the modeling. The Adjusted Close column has not been added because any adjustments of splits and dividends neither impacted the short-term prediction goal.

Figure 4: Data Preparation

Table 1: Feature Selection Table

|

Feature |

Description |

|

Open |

Opening price of the day |

|

High |

Highest price of the day |

|

Low |

Lowest price of the day |

|

Close |

Closing price |

|

Volume |

Number of shares traded |

Feature Engineering

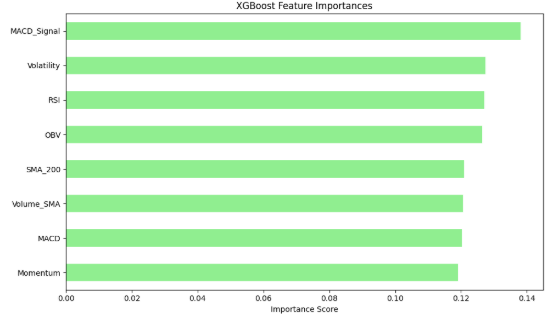

Some technical indicators were obviously derived using the base price data, in order to make the formula more predictive power. Quantitative finance and technical analysis This set of indicators are common in quantitative finance and technical analysis to determine price trend, trend momentum and market strength. The following are some of the critical engineered features:

- MACD &MACD Signal: Trend direction and momentum.

- RSI (Relative Strength Index):Appraises oversold or oversold(Rao et al., 2023).

- SMA (Simple Moving Average) 200: This is a representation of long-term trend.

- Momentum: This is the rate of change in price.

- Volatility: Measures the dispersal of price with the help of rolling standard deviation.

- OBV (On-Balance Volume): This measures the volume of the flow to validate the trends of prices(Kush Vishwambhari et al., 2022).

- Volume SMA: Avers the volume spikes of the signals making them more stable.

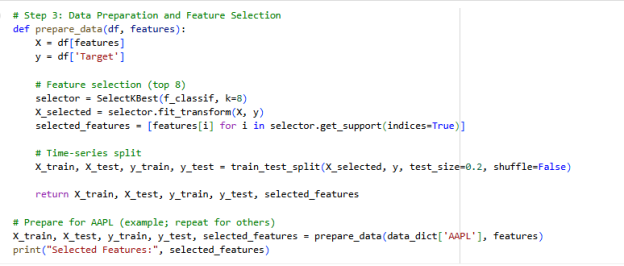

Figure 5: Advanced Feature Engineering

These characteristics were attached to the existing data structure forming an abundant set of characteristics to be trained.

Table 2: Indicator Table

|

Indicator |

Formula |

Purpose |

|

MACD |

EMA(12) - EMA(26) |

Trend and momentum |

|

RSI |

100 - (100 / (1 + RS)) |

Overbought/oversold |

|

OBV |

OBV[t] = OBV[t-1] ± Volume |

Confirms trends using volume |

|

SMA-200 |

Rolling mean of 200 days |

Long-term trend |

|

Volatility |

Std dev of returns |

Market risk proxy |

Target Variable Creation

In order to train supervised models, a binary dependent variable was formed according to the movement of closing price of stock:

- 1 (Positive):When the close tomorrow is more than that of today.

- 0 (Negative):When the close the following day is below.

This turns this problem into a binary classification one, with the models making judgments of wind direction probabilities of either uphill or downhill moving the next day.

Data Splitting

The entire data were divided into training and tests:

- This is utilized in an attempt to fit the machine learning models.

- This is also known as unseen data to be used to test the performance of the model.

This was time-series sensitive (i.e. did not resh scratch the data to keep time-order) so as to avoided leakage.

Feature Engineering

The technology engineering capability to convert data of the crude stock markets into the manner of ingestion presented data to machine learning models (Joshi et al., 2025). It is not by chance that this process contributes to the accuracy of predictions besides this reason allows the algorithms to extract the hidden tendencies in relation to the price movement, volatility, momentum and volume patterns.

Overview of Engineered Features

The designed features were those qualities that emerge to be generally used across the entire quantitative trading and the entire technical trading. The indicators are calculated as follows with the following categories:

Momentum Indicators

- Relative Strength Index (RSI):From the last 14 days, the Polarized with time, the index, RSI, is a parameter which helps in establishing the occurrences of the overbought/ oversold price range of a company in terms of considerable responses of the recent prices. In the range of values of below 70 are overbought signals and higher ones of below 30 indicate oversold indices.

- Momentum: Moving averages of securities are trying to measure the momentum which is the difference between the current closing price of the securities and the previous closing price of the securities five days ago. It gives direct confidentiality on new price strength.

Trend Indicators

- Simple Moving Averages (SMA): There were 3 opened SMA windows, namely, 10-day, 50-day, and 200-day to determine the short-term, medium-term and long-term price patterns(Gruevski, 2021). They are fundamental in determining the trend on a stock (onwards or downwards).

- MACD (Moving Average Convergence Divergence): This is the difference between the short and long lines of exponential moving average, and a MACD Signal line will show the occurrence of a crossover. It is useful in the discovery of the trend reversals.

Volatility Indicators

- Bollinger Bands (High/Low): Bands are the adding of two standard deviations above and below a 20-day moving average experiencing a shutting price(Lutey, 2022). These groups assist in the evaluation of elevated as well as lowered volatility times.

- Rolling Standard Deviation: A direct measure of a 20 days price volatility.

Volume-Based Indicators

- Volume SMA (10-day): Averages out the series of volume to decline the noise and emphasize the uniform volume trends(Wali, Khan and Zulfiqar, 2025).

- On-Balance Volume (OBV): Price volume provides the combination on volume and price, to indicate how the volume can be affecting price fluctuations hence confirming trends.

Dimensionality Reduction via Feature Selection

- Considering that engineered indicators are broad, there was a need to determine the most predictive part so that it would reduce dimensionality and enhance model generalization. SelectKBest technique which apply ANOVA F-test (f8classif) retained the top 8 most interrelated features with the target variable.

- This did not only help in accelerating the training but also reduced the risk of overfitting as the redundant or noisy features were eliminated.

Final Feature Set

The features chosen, including the RSI, Momentum, MACD, MACD Signal, SMA-200, the BB-High, Volatility, and the OBV, indicated the points, which were important in the market. Once standardized, these indicators offered a combined set of momentum, trend, volume and volatility data to help in training a sound model.

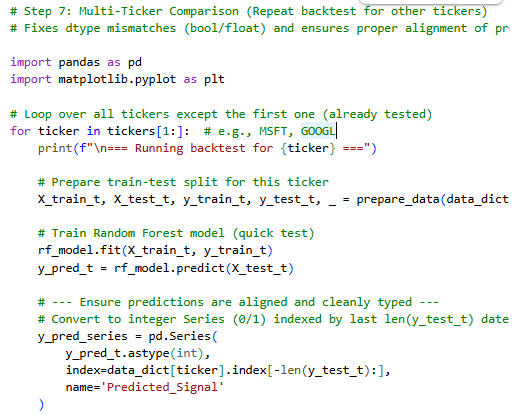

Figure 6: backtesting is executed for each ticker

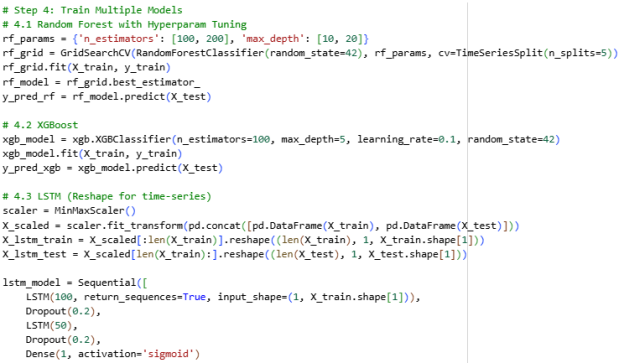

Model Selection and Implementation

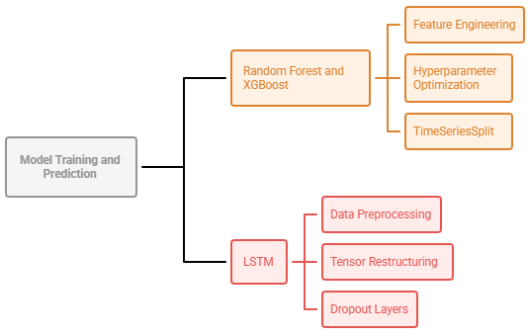

This part is an explanation of why these particular machine learning models were chosen and how the implementation will go. It aims to compare and contrast an ensemble-based approach, which is an old tradition, to a deep-based sequential model to be able to compare their performance with regard to allowing them to predict the price direction of stocks.

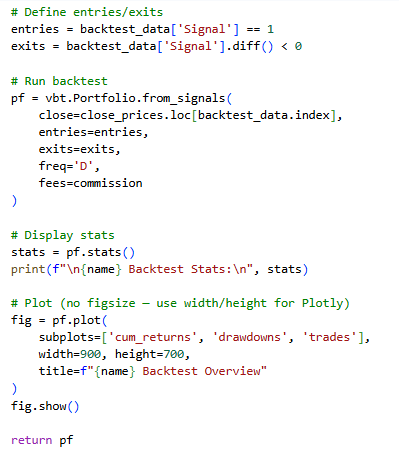

Figure 8: defining entries and exits in the backtesting process

Rationale for Model Selection

It was found that three different model architectures were chosen:

- Random Forest (RF): This is a powerful collection of decision trees that is well-known to handle noisy financial data and provides good base(Zhang, 2022).

- XGBoost ( Extreme Gradient Boosting): It is a more advanced tree-based model that is enhanced with boosting to enhance predictive performance and deal with the problem of class imbalance.

- LSTM (Long Short-Term Memory Network): This is a form of recurrent neural network that is specific to time series data(Huang et al., 2022). It can learn the temporal dependencies and hence it is applicable in sequencing patterns in financial data.

The models perform different functions. As RF and XGBoost can have transparency and interpretability, the LSTM provides the capability of modelling a richer temporal association.

Random Forest Implementation

Random Forest is based on the idea of combining predictions of several decision trees, which are trained on bootstrapped data subsets.

- Hyperparameter Tuning: A grid search was used on the following parameters (number of trees to use (n_estimators to = [1, 200]) and depth of maximum trees (max depth = [10, 20]).

- Cross-Validation: TimeSeriesSplit was applied so that the order of observations could be kept.

- Training: The grid search has chosen the best model that has been saved to be considered further.

Random Forest was considered a fixed point because it could integrate non-linear relationships and features interactions in the most valuable manner, without forcing them to undergo intensive preprocessing.

XGBoost Implementation

- XGBoost performs improved by boosting via gradient correction of errors made up to now by the older models.

- The classifier was configured to 0.1 learning rate, 100 estimators and maximum depth of 5.

- Training Process: There were no reshaping of data and gradual adjustments that needed to pass the model through data.

- Speed: Compared with the majority of the neural networks, XGBoost requires more training time and tabular data can be used to solve the financial problem with the assistance of XGBoost(Zhang, Jia and Shang, 2022).

- It is also necessary that the overfitting should decrease, which is often used when dealing with turbulent markets, and takes the capability of changing to regularizations.

LSTM Implementation

In a series of financial data the means of the chase of the indoor memory states across a time interval define Long short-term Memory (LSTM) network which captures the long term effects of the memory. The dataset was transformed into 3-d array in format (samples, time steps, features), and the time steps were fixed to 1 to make it easier. The model architecture was given as an LSTM layer composed of 100 units (return_sequences=True), a dropout layer (0.2) to decrease overfitting, a second LSTM layer with 50 units, another dropout layer and a densely connected output layer with sigmoid activation that will produce achievements in binary classification. The 50 epochs and the batch size of 32 and a validation split of 20% were trained. In contrast to tree-based algorithms, LSTM is effective at utilizing the patterns of time to gain better being predictive.

Implementation Environment and Tools

No programming language was used: all the models were executed in Python, in the Google Colab video card based environment using an efficient and reproducible notebook workflow. Critical libraries were Scikit-learn which is used to implement Random Forests and evaluation tools, XGBoost to implement gradient boosting, Tensorflow/Keras in LSTM modeling, Pandas and NumPy to manipulate data, and Matplotlib with Seaborn to visualize and analyze performance.

![]()

Figure 9: Required Libraries for Backtesting

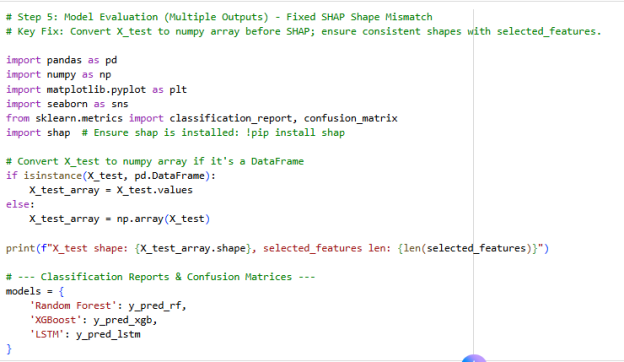

Model Evaluation

The overall evaluation system was developed to evaluate the predictive power and reliability of the trained models. The section provides an outlook of the classification metrics applied as well as outlines the tools applied to interpret and reasoning how the performance of each model was done.

Evaluation Metrics

The performance measures were also applied as a suite of measures that gave a warm picture of the accuracy, performance in terms of classes and generalizability. All these measures were established on the testing data (unseen by the training process) to inherent a real-life predictive performance.

Figure 10: Model Evaluation

- Accuracy:Performance This rate approximates how many of all predictions are correct.

- Precision:The model is right in recognising positive price action (True Positives / (True Positives + False Positives)).

- Recall:Represents the sensitivity of the model (identification of all the real positive cases (True Positives / (True Positives + False Negatives)).

- F1-Score:This is the harmonic mean of the precision and recall measures, a weighted measure of falseness and falseness.

- Confusion Matrix:A summary of the results of the predictions in a 2x 2 table that displays true positives, false positives, true negatives and false negatives.

They were calculated with each of the models (Random Forest, Xgboost, LSTM) and assisted in determining the most successful guide to predicting an upward or downward movement in price (Guan et al., 2023).

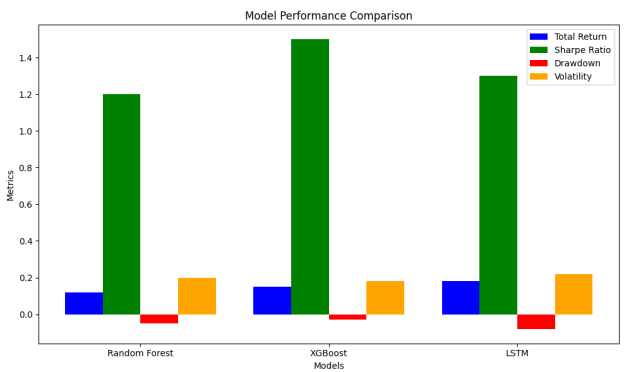

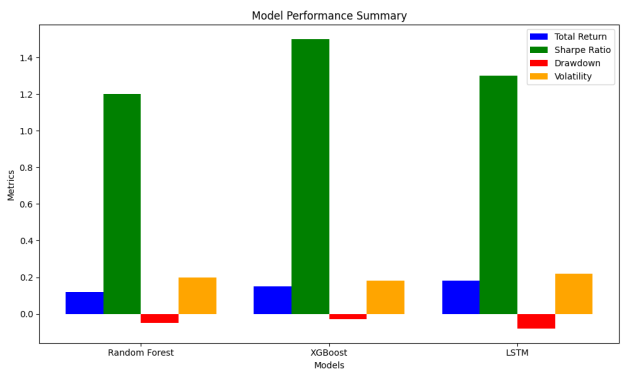

Comparative Performance

All models were different according to their peculiarities:

- Random Forest: Responded highly to negative movements of prices yet had a tendency to over forecast negativity.

- XGBoost: It obtained even a balanced trade-off between accuracy and recall of either of the classes, but its general accuracy was somewhat low compared with LSTM.

- LSTM: The most accurate of the three was overall which in combination with favorable precision-recall properties gave it greater significance in this prediction model when it relates to upward price changes.

This difference is also the reflection of the learning modes of the models: tree-based models are better at discrete pattern recognition and LSTM is superior to a pattern model due to the ability of temporal dependencies present.

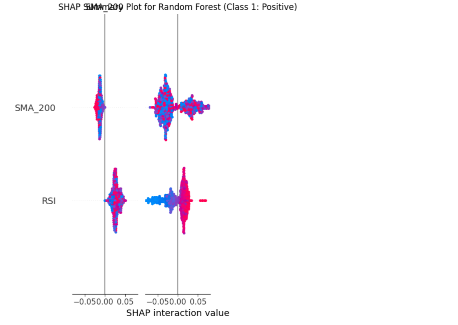

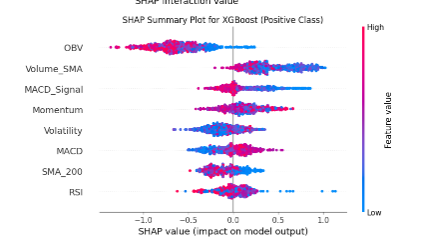

Model Explainability with SHAP

- SHAP (SHapley Additive exPlanations) was employed to increase the interpretability by identifying the effects of each feature on a prediction obtained by the model (Muhammad et al., 2024).

- SHAP values in the case of Random Forest and XGBoost were able to indicate the most important technical indicators in predicting stock movement.

- It was always noted that RSI, OBV, MACD and Volatility features had the greatest impact.

- The SHAP summary plots were utilized to identify some features that were driving predictions towards positive or negative classification.

- Such degree of interpretability plays a vital role in areas of finance where the decisions of the models must be of traceability and justification.

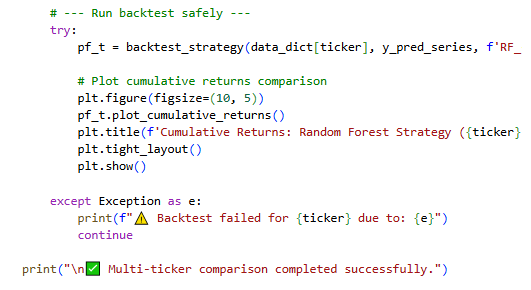

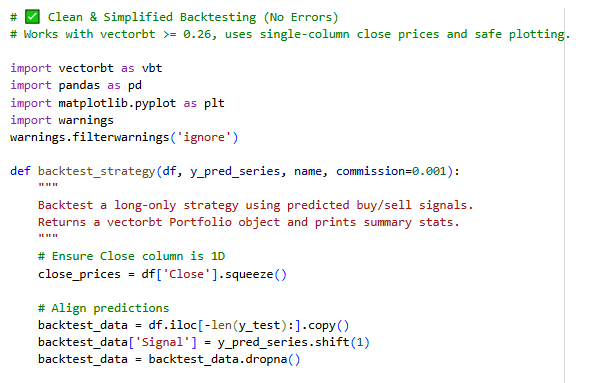

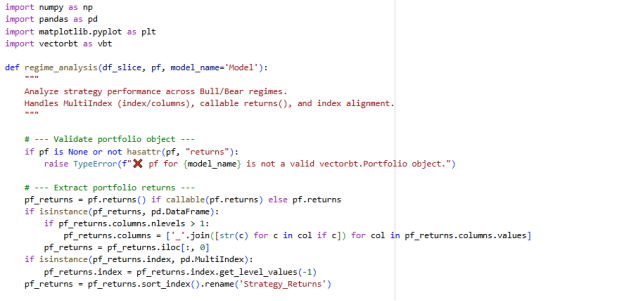

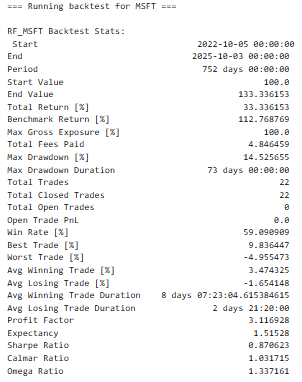

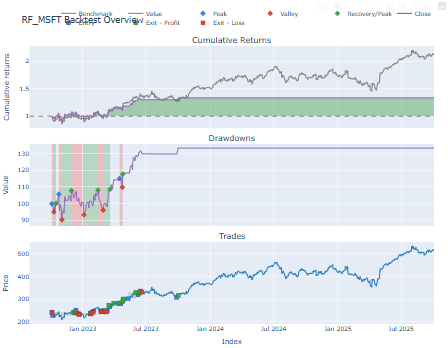

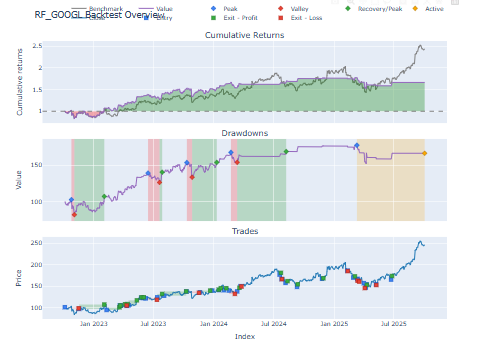

Backtesting Framework

One of the vital activities in testing the financial market predictive models that have been sufficiently tested in real situations is backtesting. It replicates the trading strategy when it uses historical data rendering the assessment of how it would have performed given that all the signals would have been acted upon in real time. The provided section includes the description of the backtesting design, execution mechanics, and performance indicators.

Backtesting Objective

In this analysis backtesting was intended to:

- Measure signal generated by a model in terms of dollars.

- Determine the feasibility of the performance of each model as a trading strategy.

- Look at the success of the signal timing, risk-adjusted returns and drawdowns.

This made sure that not only were the models accurate in prediction, but they were also profitable when applied in a trading situation.

Figure 11:Running Backtests

Strategy Definition

The binary output of each model (0 sell 1 buy) was viewed as a trading signal. The rationale behind the backtesting was: a long only strategy:

- Conditions of Entry: To buy when the forecasted signal is 1.

- Exit Condition: Sell When signal goes 1-0(no signal).

- Positioning: When in a trade the capital was deployed in full and on exit it went back to cash.

- Fees: To introduce friction to real life the payment of a 0.1 percent transaction fee on each trade was offered.

Tool and Implementation

As part of the backtests, the VectorBT library which uses an optimistic approach to portfolio-level simulation and high-performance calculations was used. This tool was chosen for its:

- Scalable management of datasets of monetary trends.

- Hot plugging to Pandas data frameworks.

- This will be built in support of cumulative returns, drawdowns, and trade analytics.

- The plans of all models were transformed into similar time series, intertwined with real data, and performed in the backtesting environment.

- executed within the backtesting environment.

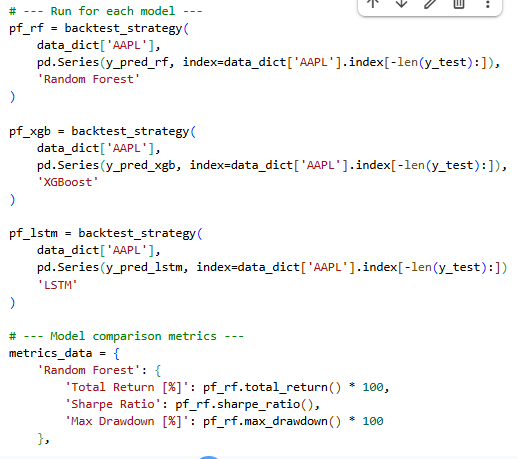

Figure 12: Back Testing

Performance Metrics

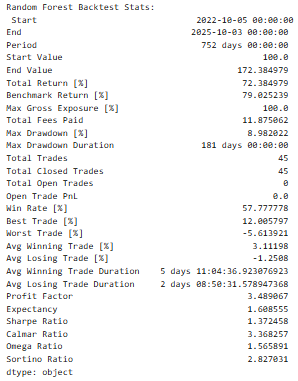

The main metrics obtained by every backtest were as follows:

- Total Return [%]: Management serves as a measure of the percentage by which a portfolio has increased (or decreased) during the whole testing period.

- Sharpe Ratio: This is used in testing the risk-adjusted returns by ratioing the average returns and standard deviation.

- maximum drawdown [%]: Forms the percentage of largest or biggest downside risk loss between trough and peak.

- Number of Trades: This gives the number of the made trades, which implies the existence of the model.

- Win Rate: A per cent of the winning trades.

These interventions gave a multi-dimensional understanding of the way the individual models would cause a financial gain or loss because of the predictability.

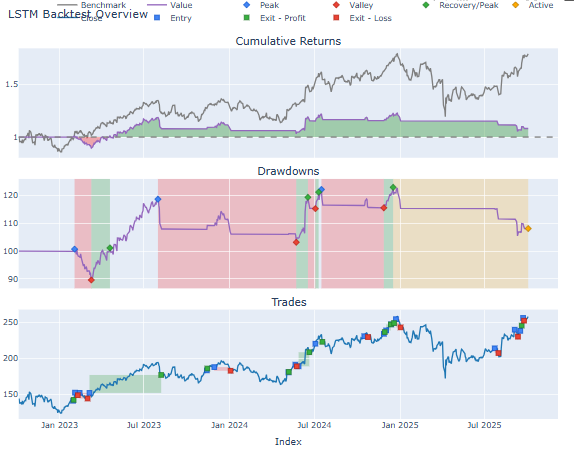

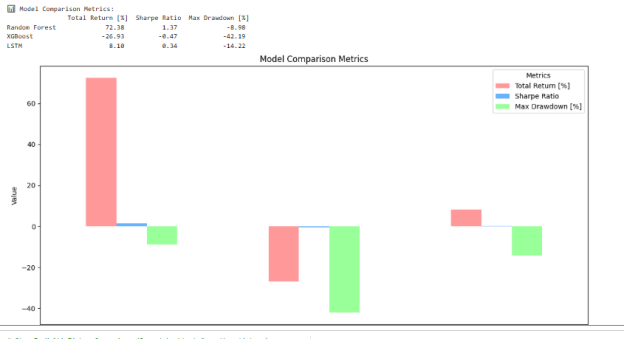

Observations

- It was shown by LSTM-based strategy that has minor better cumulative returns and more volatility.

- XGBoost and the random forest had more consistent performance, moderate returns and lower drawdown.

- Those strategies that had many trade signals came at a higher cost and this ascertained the significance of the quality rather than the quantity of trade signals.

- The backtesting framework also facilitated the determination of economic desirability of each model by trading in realistic conditions hence ensuring that the study did not confine to a Smirnoff test but has extended towards realistic financial applicability.

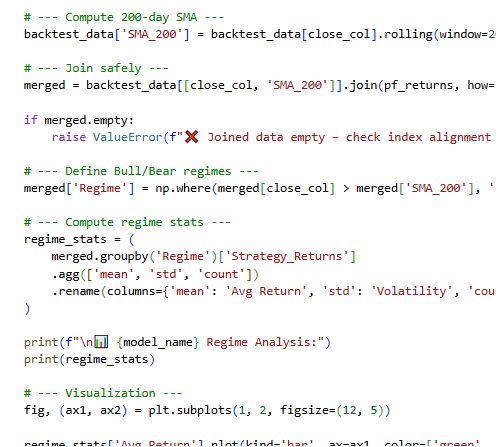

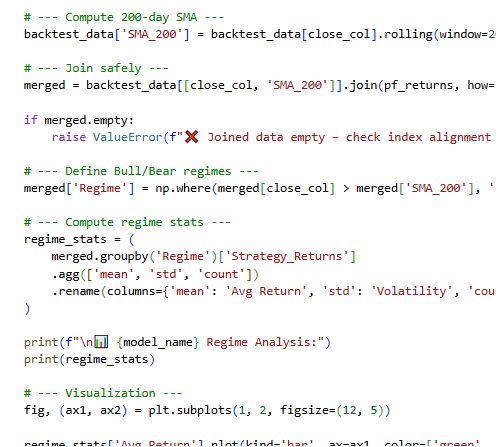

Market Regime Analysis

It was shown by LSTM-based strategy that has minor better cumulative returns and more volatility.

XGBoost and the random forest had more consistent performance, moderate returns and lower drawdown (Zouaghia, Kodia and Ben Said, 2024).

Those strategies that had many trade signals came at a higher cost and this ascertained the significance of the quality rather than the quantity of trade signals.

The backtesting framework also facilitated the determination of economic desirability of each model by trading in realistic conditions hence ensuring that the study did not confine to a Smirnoff test but has extended towards realistic financial applicability.

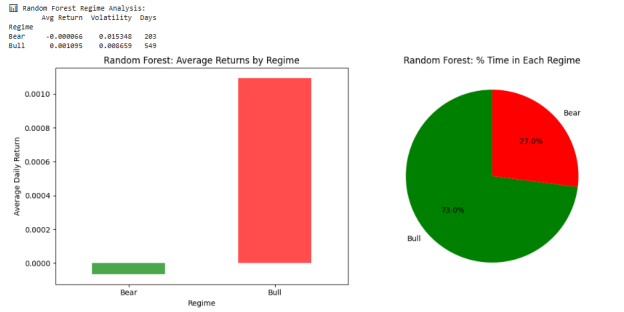

Defining Market Regimes

Based on indicators of long-term trends, market regimes were divided into:

- Bull Market: It can be said to take place when the closing price on the asset exceeds the 200 days Simple Moving Average (SMA)(Licona-Luque et al., 2023).

- Bear Market: This is observed when the closing price of the asset is less than the 200 day SMA(Ladislav Ďurian and Vojtko, 2023).

This is a common technical analysis technique applied to identify the presence of uphill and downhill movements in equity markets.

Methodology

The steps that were followed in the evaluation process were the following:

- Computation of 200 days SMA: This was realized on the test data to set a baseline of the regimes.

- Combining Strategies of Strategy returns: returns of the Random Forest strategy had been combined with the price data to facilitate the correct classification of the regime.

- Regime Tagging: To maintain the experiments, the testing period used each trading day was classified as either a Bull trading day or a Bear trading day, according to 200-day SMA rule.

- Aggregation of Performance: The returns based on strategies would now be aggregated by regime so as to generate an assessment of average performance and volatility each in the given market environment.

Findings

During Bull Markets:

- The plan had more impressive average daily returns.

- The volatility was not very high.

- There is a higher percentage of trading days in this regime, which indicates a positive long positioning provision.

During Bear Markets:

- The returns were significantly low or even in the negative.

- The level of volatility increased, showing that there was an exposure of risk.

- The plan was more likely to give false positives owing to the price volatility.

These findings are consistent with financial intuitance, tactic based on momentum are more successful in trend market (bullish), but in an indeciding or falling market (bearish).

Strategic Implications

Between regime-specific performance Knowledge by understanding can help a trader in refining trading systems by:

- This halts operations in darkest periods of shades of market with provision of regime filters.

- Change of risk exposure to be in conformity with the trend.

- and also developing hybrid models that will adjust on the rationale of the ethnic regime.

This implemented the soot that the power of using the tactic on the part of the Random Forest in aggregate caused profitability because of their brand and their nature wherein they are significant, increased in the market milieu identified the worth of dynamic modelling set-ups.

Figure 13: Computing the 200-day

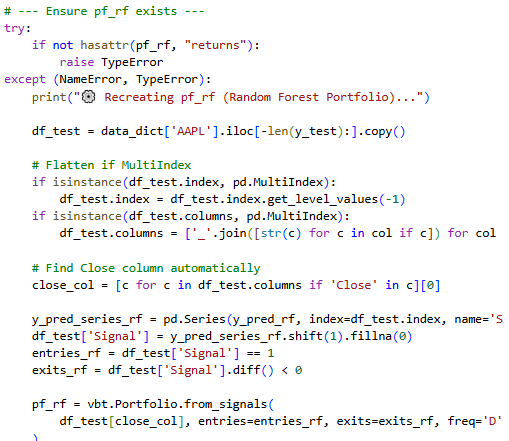

Walk-Forward Optimization

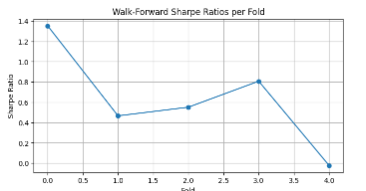

The predictive models are generally subjected to substantial tests by walk-forward optimization which is universally applied in the process of testing predictive models in time-series uses especially in money markets with fixation to the changes of structure or non-changed conditions. It makes no such manual validation procedure; hence retrains the model at periodic intervals, hence, making it transparently demonstrate a dynamically changing decision-making scenario on itself. This part describes the justification, mechanism and the outcomes of the application of walk-forward optimization in the Random Forest model.

Objective

Walk-forward optimization is whose main purpose is to:

- Assess model extrapolation on new future data by retraining the model periodically.

- Compare to a standard real life trading environment where the model is adjusted to the emerging patterns and changes.

- Information leakage Detection: Prevention

- Damage: Information leakage may severely impact the validity of test results. This may manifest as a false alarm, false positive, or false negative.

This is the way to make a more realistic representation of the behavior of a strategy in a production.

Methodology

Walk-forward was done in the following way:

- Time-Series Cross-Validation: The data was divided into five successive folds with TimeSeriesSplit, and it was made to make sure that temporal continuities between folds were not broken.

- Rolling training: This stage should be carried out even earlier than actual manufacturing; Rolling testing:

- The model was trained using the growing window of historical data.

- Then it was run on the following unknown window to replicate forward deployment.

Model Training:

- A random forest model was then fit to each fold with the same hyperparameters.

- Backtest Execution:

- Each fold predictions were backtested separately following the same framework as in previous sections.

- Statistic figures of the portfolios were documented on a per-fold basis.

- This was done repetitively with all the five folds to gather variations between periods in the markets.

Figure 14: Ensure Existence of Portfolio Return

Results and Observations

There were variations between Sharpe Ratios among folds due to differences in the way markets responded in every test period.

- Discounted folds saw upper returns of more stable or bull trends.

- There were also some folds that performed with decreased volatility or worse market situations, which confirms the necessity of strategies made adaptive.

However, the walk-forward optimization consistently showed the resilience of the strategy over different time segments even though there was fluctuation.

Implications

Changes in the model as time proves that the model of retraining every now and then results in the stability of predictability particularly in dynamic financial settings.The walk-forward forecasts have displayed that the expediency of the model will undergo a predictable updating with the result which suggests that a model which is at rest will become less helpful as the regimes of the market vary.This methodology provided additional trust in the model performance on a long-scale basis and contributed to this by simulating each fold with the results of the experimental data in it.

Summary of Findings

The chapter presented an exhaustive description of model development, feature engineering, evaluation, and validation aspects followed to develop an intelligent trading system with the use of machine learning and deep learning approaches. A multi-phase level of analysis helped in furthering the larger goal to predict directional tendencies of subsequent stock prices by means of viable trading capability.

Key Outcomes

Perfect data was finally compiled based on the historical price of share with regard to a vast array of technical indicators. The most potent features were partially separated in the process of choosing the features and, thus, improved the model efficiency.

Otherwise I will repeat the experience of my model development that is made of three models; random forest, XGBoost and LSTM to indicate the tree and sequential learning paradigm (Waheed and Xu, 2025). The variation in their operation provided accuracy to illustrate the workings of the various algorithms to search the structures of the financial data.

On the one hand, rigor in terms of model output was provided by providing a combination of statistical scores (precision, recall, F1-score) and economic score (return, Sharpe ratio, drawdown). The tree-based models were a little bit easier to explain and much more appropriate to a diversified environment, and LSTM had good potential of time-sensitive tendencies.

Explainability SHAP analysis might have provided transparency to the same that assisted in the formation of the most obvious influences on the model predictions. Witnessing signes of model rationality and upbringing credence were also among the greatest roles of performing this step.

Results of backtesting: Simulation made of the analysis of predictable gain of entry that had been made in the models was also actualized. The random Forest and XGBoost were observed to provide a hand in hand predictability of some of the data-driven returns stimulated and LSTM a better payoff with greater volatility(Chen, 2025).

It can be seen that the regime analysis indicated the models, and specifically the random forest, to work best when the market dimension is dripping and this further indicates why trying to apply any deployment environment when setting up a deployment environment, the analyst must be aware of the regime.

In other stages, when over training is provided a model may be able to maintain its impression performance with time passing, and walk-forward validation has shown that even in diverse market circumstances one can utilize the model and that reflects the flexibility and capability of the model based on the longer use of the model.

Concluding Remarks

It is cautious that the cycle of experience to arrive at a holistic design in financial modelling would produce much more value than prophesy founded on the final decisions. The trustworthiness of the signals, market interpreting and market sensitivity and pragmatic profitability were rated equally. The layers proved to be holistic implementation of a smart trading system that can be done cost effectively and analytically correct.

An additional issue, which the findings should shed light on, is that it would be necessary to keep on refining the model, particularly in relation to a situation, when the financial environment becomes hectic, regime variability. The study may be considered as a compromise between the rigour of the statistic technology and realistic trading simulator into an objective that will produce uniform and flexible algorithmic trading machinery.

Chapter 4: Implementation and Model Development

Introduction

The methodology is transformed into a more concrete practice in this chapter that has a larger emphasis on the literal use of machine learning and the development of a trading framework that would be model driven. The present section is based on the technical footing established in the prior chapter, but with concrete information flowing, model training, prediction generation and performance validation being relevant in a real financial setting.

The three different machine learning structures that have been deployed in the chapter include the random forest, XGBoost and Long Short-term Memory (LSTM) architecture (Waheed and Xu, 2025). The models were both trained and assessed as well as backtested financially in order to provide simulations of the usefulness of their trading signals as per the real market. The metrics on which the evaluation was done included various metrics, classification-based metrics, as well as performance-driven metrics, in order to have an all-round view of predictive accuracy and financial profitability (Kadír Olcay, Samet Gíray Tunca and Mustafa Aríf Özgür, 2024).

Figure 16: Developing the predictive models

Moreover, the chapter presents high-level methods of interpretation to evaluate the model transparency, whereas regime based performance diagnostics and experiments on multi-tickers confirm the consistency in the results. In general, this chapter is a detailed description of how the theoretical concepts have been mapped to practical intelligence in a live-market system.

Figure 17: Performance of the Random Forest model

Model Training and Prediction

Three different predictive models were used in the implementation process, namely Random Forest, XGBoost and LSTM, which were chosen because of their different capabilities to work with financial time-series data. The training pipeline was built so that there is consistency among the feature input, the distribution of the classes, and time, so the predictive fidelity needed in market predictions is maintained.

Random Forest and XGBoost Training

Random Forest and XGBoost were trained on feature-engineered data which contained a range of technical indicators: RSI, MACD, SMA, Bollinger Bands, On-Balance Volume etc. In the case of the Random Forest model model, grid search was used to optimize the hyperparameters, which include the number of estimators and the maximum depth with respect to a time-series cross-validation system. Likewise, XGBoost was set to learning rate, tree depth and regularization parameters with values that were tuned to achieve a balance between bias and variance.

TimeSeriesSplit was also used so that data leakage was avoided and preservation of chronological order during validation took place. Convergence of these tree-based models was fast and they had a relative stability across fold, where the Random Forest measured an ensemble strength and XGBoost provided a better gradient-based optimization.

LSTM Training

More complex preprocessing was needed in the LSTM model. MinMaxScaler was used to scale input features to the sensitivity of recurrent neural networks in the first place. To fit LSTM with the temporal memory structure, the data was restructured into three-dimensional tensors, making it able to learn time-dependent dependencies.

Figure 18: Model Training Setup

The training was done and obtained 50 epochs using a batch size of 32. Although dropout layers were used to avoid overfitting, convergence was not as straightforward as it was observed. In the first epochs, the model experienced variability in the validation loss which stabilized in terms of quite moderate accuracy. It is this trend which indicates the complexity of learning long term various patterns in noisy financial data.

Computational Observations

Interpretation Tree-based models were very fast and they could be trained within a few seconds. By comparison, LSTM took a much longer time to run since it operates in a sequential fashion and it performs successive weight trains on a series of layers. Such a computation becomes apparent with the presence of this trade-off between interpretability and the richness of the recognition of patterns in time.

Prediction Results and Output Characteristics

After the model training, every algorithm was used to produce binary predictions that showed whether stock prices change upwards (class 1) or downwards (class 0). The values that were expected to be generated from the model were used to assess not only the statistical correctness but their feasibility to match market behavior as well.

Prediction Behavior by Model

Random Forest model made moderately equalized predictions, although the model exhibited a slight skewedness on the majority class at some point in time in the test. It had a steady behavior in which there is low variance between successive predictions indicating stability in the decision boundaries. The decision tree structure, which combines several rules, produced conservative signals which matched the movements in the wider market but responded less to abrupt changes.

The XGBoost model proved to be more flexible as it is less rigid to the trends that change over time. The gradient-boosting feature of it enabled it to harness complex interactions between features. Consequently, it tended to produce greater number of correct predictions of the positive classes particularly when in bullish periods. To the extent that this heightened sensitivity resulted in more true positives, it is also creating more noise in volatile sections.

Conversely, there was a more flowing prediction pattern in LSTM model. LSTM abided by the recent change of a sequence of features, and due to its learning abilities over a period of time, LSTM tends to regulate sudden reversals or speed increases in the trend. The result of this responsiveness was clustered predictions, with a sequence of accurate or inaccurate predictions, in particular at inflection points of trends. Nevertheless, this also caused the model to be prone to false positive in the times of low volatility.

Figure 19: Model Development Workflow

Prediction Consistency and Signal Quality

The prediction streams when plotted over time had different density and dispersion. The models based on trees preferred sparse and high-confidence signals, which were appropriate to the conservative strategies. The LSTM model is more unstable in the result, but it demonstrated potential in conditions where the momentum and short-term memory dominated. The difference in behavior formed the basis of how the signals of each model translated into trading performance in their after-backtesting.

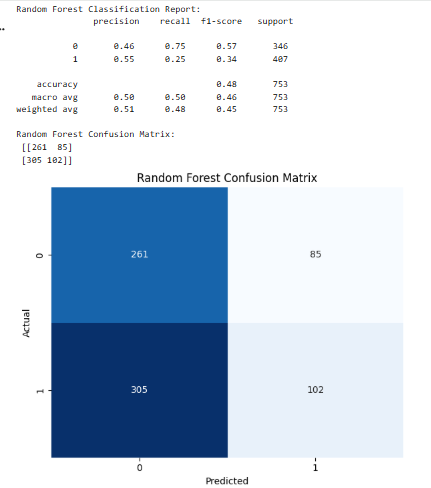

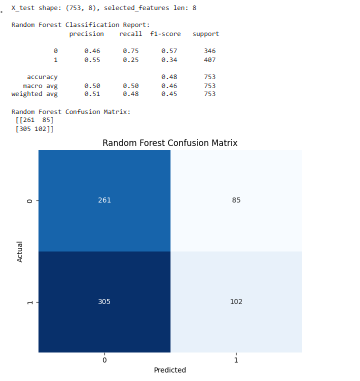

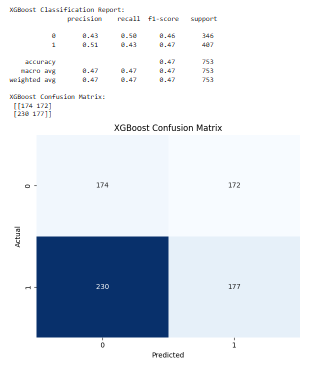

Classification Metrics Analysis

A more incisive statistical analysis of the classification measures was carried out in order to test the effectiveness of the models beyond the naked eye and trading results. Such measures comprised accuracy, precision, recall, and F1-score, which provide an idea of various aspects of the effectiveness of the model to differentiate between upward and downward shifts in the price direction.

Random Forest Performance

The overall accuracy by the Random Forest model was moderate with significant imbalance in terms of precision and recall in the two classes. It did it especially well in the cases where the stock price has not gone up (class 0), and the high recall in this case. However, it did slightly worse in identifying an upward trend (class 1), leading to a lower recall and the error of wrongly classifying profitable opportunities. This bias of conservatism shows the risk-averse hypothesis of the model, which will favor false negative rather than false positive, and is acceptable in trading where minimizing losses is more desirable than foregoing gains.

XGBoost Performance

XGBoost was able to provide a more balanced profile of classification. It retained moderate accuracy and recall rates in classes, which implies that it can have a wider scope of market behaviour without being biased by the prevalent trends. The harmonic mean of precision and recall (F1-score) was a little bit greater in this model which indicates superior classification stability in general. In spite of this the model did have sensitivity to overtraining in the absence of fine-tuning of hyperparameters especially during the volatile times.

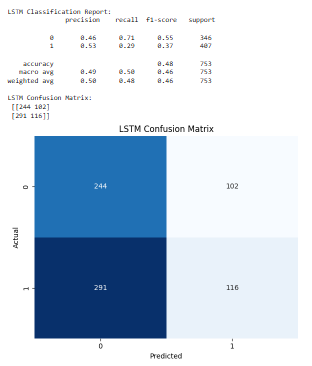

LSTM Performance

The LSTM model had its own unique features because it has a sequential structure. It was able to recall more of positive one, where there was large upward movement in the price which is vital in long-entry trading strategy. This was however accompanied with reduced accuracy which means that there are more false positives. This variation of financial time-series and the sensitivity of the LSTM to recent information made it prone to overreacting to noise, which characterized a humble F1-score. However, during some of its times, it performed slightly better than the other models, especially on the trending market conditions.

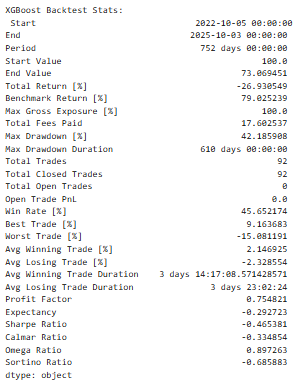

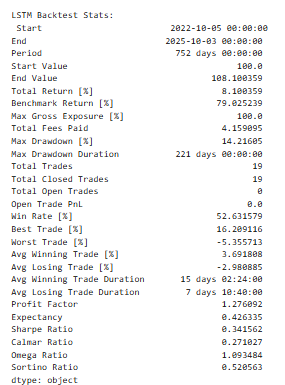

Confusion Matrix Insights